We recently upgraded our project to run on EF6. It is still in beta in the Dev branch, so it has not been deployed to most of our customers. Today I had to fix a bug in an older version of our system that is still on EF5.

Long story short: I tried to connect with EF5 to a database that already had EF6 migrations applied. EF loves to throw cryptic error messages, and this time was no exception, excuse the pun.

This time the exception says "The provider did not return a ProviderManifest instance.", with an inner exception saying "Could not determine storage version; a valid storage connection or a version hint is required." Neither made any sense at that point, and it took me a couple of hours to figure out what was happening.

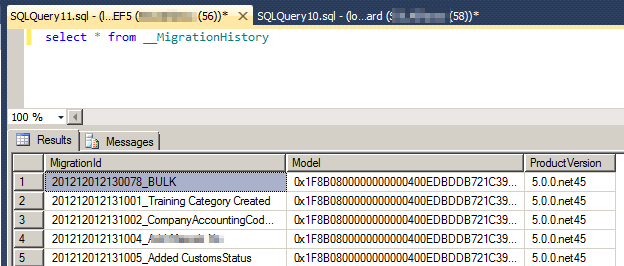

Let me go into a little detail about the EF migration system. EF stores database state information in the __MigrationHistory table, which looks like this for EF5:

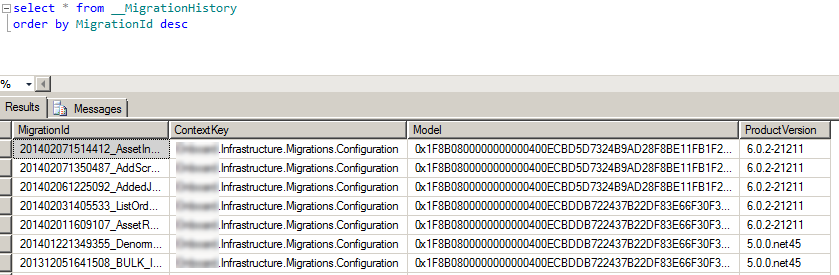

For EF6 the structure of the table has changed slightly:

Notice that on the second screenshot there is an extra column holding the DbContext type name used for the migration. This is a new feature in EF6: it allows more than one context to live in one project, and you can run migrations for each of them. I have not tried this yet, but the time will come pretty soon!

Also, once we ran migrations in EF6, the version recorded next to each migration record changed from 5.xx to 6.xx. This is also visible on the last screenshot.

If you try to connect to this database with an older version of EF, the new table structure throws the system off its tracks. I tried changing the version number in the data, but it made no difference, so don't bother.

I have not found a solution to this issue other than re-creating the database migrations in EF5. So be careful when you upgrade to EF6 and run migrations: as far as I can tell, there is no way back to EF5.