This post is a summary of links I’ve studied about ARM.

One of the things we do on our project is automatic provisioning of services in MS Azure for new clients. A couple of years ago we did provisioning manually and that took days. Now we have a web-based system that does it all for us: it talks to the Azure API and asks for new websites/databases/storage/etc. to be created for the system we work on. I’ve been actively writing this system for the last 3-4 months.

I’ve been using the Azure Management C# libraries to access the Azure API for a couple of years now. As far as I can remember, these libraries never actually came out of preview or were approved for production. I had a lot of trouble with them, especially when I took half-year breaks from that project, then came back and realised that half the APIs I’d used had changed.

This time I came back to this problem and realised that I’d missed the Azure Resource Manager bandwagon and the plethora of new libraries, so I need to start learning from scratch again (don’t you hate that?).

Now there are 2 ways to access the Azure API: Azure Resource Manager (ARM) and Classic Azure Management (the old way). ARM is the new cool kid on the block and looks like it is here to stay, because the new Azure Portal is built entirely on it. See the comparison of the new and old ways.

There are a lot of differences between new and old. The old system required you to authenticate with a certificate attached to your HTTP requests, and you had to manage these certs and all that. I’m sure there were other ways to authenticate, but when I started working with the Azure Management API this was the only way I knew. ARM lets you authenticate via Azure AD, where you need to know a couple of GUIDs and a password. Here is the overview of ARM.

The most radical change is that ARM is built around Resource Groups: everything you create must be in a group, so you need to create a Resource Group first, then the resources. There are benefits to that. You can view billing per group, i.e. put all resources related to a project in one group and see how much that project costs you, without having to go through per-item subscription billing. Another massive benefit is access control: now you can give users access only to a specific group of resources (before, you could only give access to a whole subscription). Read more about Role-Based Access Control and built-in roles.

Authentication

But I digress: I’m working with the API at the moment. Authentication is slightly easier now. You’ll need to create an application in Azure Active Directory, get a “client secret” from it and run about 3 lines of C# to get a bearer token. Read more about the authentication process here (including a code sample). And this one shows the creation of an AD application.
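To make the token step concrete, here is a minimal sketch of what happens underneath, as far as I understand it: a plain OAuth2 client-credentials POST to Azure AD, done with HttpClient instead of an Azure library. The class and method names (ArmAuth, BuildTokenForm, TokenEndpoint, GetTokenAsync) are made up for illustration, and I'm assuming the v1 token endpoint on login.microsoftonline.com plus a runtime that has System.Text.Json and C# 8.

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical helper, not part of any Azure library.
public static class ArmAuth
{
    // Form fields for a client-credentials token request.
    // "resource" names the API the token is meant for (ARM here).
    public static Dictionary<string, string> BuildTokenForm(string clientId, string clientSecret)
    {
        return new Dictionary<string, string>
        {
            ["grant_type"] = "client_credentials",
            ["client_id"] = clientId,
            ["client_secret"] = clientSecret,
            ["resource"] = "https://management.azure.com/"
        };
    }

    // Assumed Azure AD v1 token endpoint for the given tenant (directory) id.
    public static string TokenEndpoint(string tenantId)
    {
        return "https://login.microsoftonline.com/" + Uri.EscapeDataString(tenantId) + "/oauth2/token";
    }

    // Posts the form and pulls "access_token" out of the JSON response.
    public static async Task<string> GetTokenAsync(
        HttpClient http, string tenantId, string clientId, string clientSecret)
    {
        using var content = new FormUrlEncodedContent(BuildTokenForm(clientId, clientSecret));
        using var response = await http.PostAsync(TokenEndpoint(tenantId), content);
        response.EnsureSuccessStatusCode();

        string json = await response.Content.ReadAsStringAsync();
        using var doc = JsonDocument.Parse(json);
        return doc.RootElement.GetProperty("access_token").GetString();
    }
}
```

The tenant id and client id are the "couple of GUIDs" and the client secret is the password. Usage would be something like `string token = await ArmAuth.GetTokenAsync(new HttpClient(), tenantId, clientId, clientSecret);`.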

Then for every request to ARM you need to attach this token as an Authorization header on the HTTP request: request.Headers.Add(HttpRequestHeader.Authorization, "Bearer " + token);

Requests

Now you don’t even need any libraries: you can form requests yourself pretty easily. You need the authentication token as a header and you need to know the URL to work with. Then you POST/PUT a JSON-formatted object to create or update a resource, and to delete it you send a DELETE request to the same URL. The URL always maps to a resource, very RESTful indeed.
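A rough sketch of such a hand-made request, using HttpClient types rather than the HttpWebRequest style: it only builds the message (method, URL, bearer token, optional JSON body) and leaves sending to the caller. ArmRequests and Build are names I made up for illustration.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Hypothetical helper, not part of any Azure library.
public static class ArmRequests
{
    // Builds a request for ARM: the bearer token goes into the Authorization
    // header, and the JSON body (if any) is sent as application/json.
    public static HttpRequestMessage Build(HttpMethod method, string url, string token, string jsonBody = null)
    {
        var request = new HttpRequestMessage(method, url);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);

        if (jsonBody != null)
        {
            request.Content = new StringContent(jsonBody, Encoding.UTF8, "application/json");
        }

        return request;
    }
}
```

Creating or updating is then `await http.SendAsync(ArmRequests.Build(HttpMethod.Put, url, token, json))`, and deleting is the same call with `HttpMethod.Delete` and no body.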

The URL you need to work with looks similar to this:

https://management.azure.com/subscriptions/{subscriptionId}/resourceGroups/{resourceGroupName}/providers/{resourcetype}/{resourceName}?api-version={apiversion}

Here is a sample URL accessing a website resource:

https://management.azure.com/subscriptions/suy64wae-f814-3189-8742-b53d5a4532cd/resourceGroups/NewResourceGroup/providers/Microsoft.Web/sites/MyHappyWebsiteName?api-version=2015-08-01
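If you'd rather assemble these URLs in code than by hand, a small helper along these lines would do. ArmUrls and ForResource are hypothetical names, and the sketch simply follows the pattern shown above.

```csharp
using System;

// Hypothetical helper, not part of any Azure library.
public static class ArmUrls
{
    public const string Endpoint = "https://management.azure.com";

    // resourceType is "namespace/type", e.g. "Microsoft.Web/sites".
    // It contains a slash on purpose, so it is not escaped as a whole.
    public static string ForResource(
        string subscriptionId, string resourceGroup, string resourceType,
        string resourceName, string apiVersion)
    {
        return Endpoint
            + "/subscriptions/" + Uri.EscapeDataString(subscriptionId)
            + "/resourceGroups/" + Uri.EscapeDataString(resourceGroup)
            + "/providers/" + resourceType
            + "/" + Uri.EscapeDataString(resourceName)
            + "?api-version=" + Uri.EscapeDataString(apiVersion);
    }
}
```

Calling `ArmUrls.ForResource("suy64wae-f814-3189-8742-b53d5a4532cd", "NewResourceGroup", "Microsoft.Web/sites", "MyHappyWebsiteName", "2015-08-01")` gives back the website URL from the sample above.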

But don’t worry about this: there is a Resource browser at https://resources.azure.com/ that will tell you exactly the URL you need. Just navigate to an already existing item and see the generated URL. The site will also show you the JSON format/data to send through to ARM.

Templates

Another great feature of ARM is Templates. Basically you describe the resources you need in JSON, add parameters and feed that to ARM (either programmatically or through the Azure Portal). I’ve not yet found a good programmatic way to use templates, though: I have seen samples where you need to upload the template JSON file and the parameters JSON file to Azure Storage and tell ARM where to look for them. I’m not convinced about this; it sounds like too many steps with all the uploading.

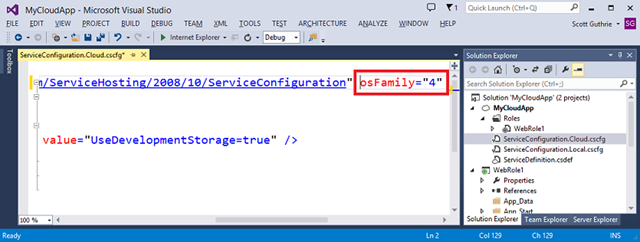

Here is the definition of templates. You can create them within Visual Studio 2015, or copy-paste JSON from existing objects in the resource browser and modify it to your needs.
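On feeding templates to ARM programmatically: as far as I can tell, the deployments endpoint (resource type Microsoft.Resources/deployments, same URL pattern as any other resource) also accepts the template and parameters inline in the request body, which would avoid uploading anything to Azure Storage. I haven't battle-tested this, so treat it as a sketch. ArmDeployments and BuildBody are made-up names, and System.Text.Json is assumed to be available.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper, not part of any Azure library.
public static class ArmDeployments
{
    // Wraps a template and its parameters into a deployment request body:
    // { "properties": { "mode": ..., "template": {...}, "parameters": {...} } }
    // parametersJson is expected in the form { "name": { "value": ... } }.
    public static string BuildBody(string templateJson, string parametersJson)
    {
        using var template = JsonDocument.Parse(templateJson);
        using var parameters = JsonDocument.Parse(parametersJson);

        var body = new
        {
            properties = new
            {
                // Incremental mode leaves resources not mentioned in the template alone.
                mode = "Incremental",
                template = template.RootElement,
                parameters = parameters.RootElement
            }
        };

        // Serialize while the parsed documents are still alive.
        return JsonSerializer.Serialize(body);
    }
}
```

The resulting string would then be sent with a PUT to the deployment URL inside the resource group, with the bearer token attached like on any other ARM request.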

Links