Yesterday I discovered Package Explorer for opening up OpenXML documents, validating and editing. Today I have found OpenXml SDK Tool.

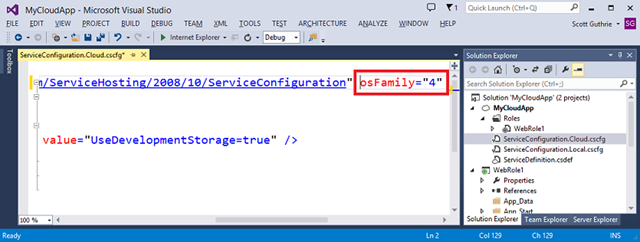

SDK Tool is a great software from Microsoft for every developer working with OpenXml. SDK Tool is similar to Package Explorer, but you don’t have edit functionality. And validation messages are not as good. But you get an instant access to documentation about any possible element you can imagine.

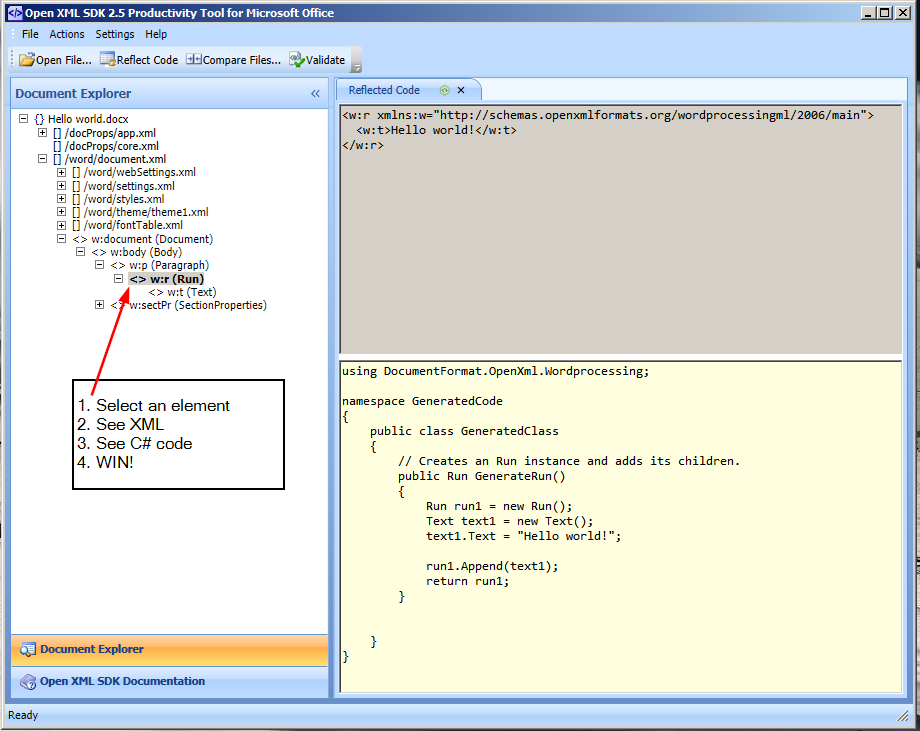

And the killer function is CODE GENERATION! It generates C# code for any element that is available on the page.

You can create a Word Document, open it up in SDK Tool and it will produce you a full C# listing to create exactly the same document from your code! This is just fantastic! Using this tool today I was discovering how things done in a simple way: add page numbers to an empty Word-doc, open it up in SDK Tool, find the part about page numbers (probably this was the hardest part), copy the generated code, slightly refactor and I had page numbers in my generated documents. All it took about 15 minutes and I did not use Google or any other documentation.

Anyway, download SDK Tool from here: http://www.microsoft.com/en-gb/download/details.aspx?id=30425. Select only SDK Tool to download, you won’t need anything else available on the page.

Once installed find Open XML SDK Productivity Tool in your start menu and open a Word document.

You can select any element of the document and see documentation to that, with all available child node types

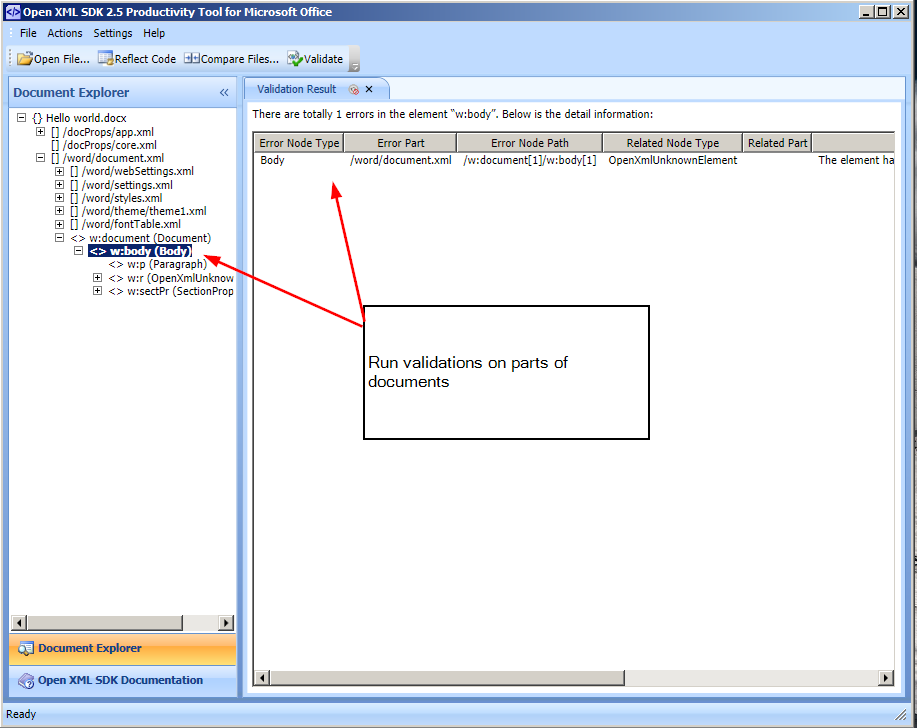

You can run validation of any element in the document:

And the promised killer feature – Generated code:

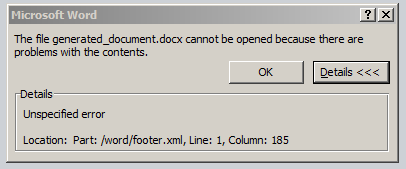

Another quite useful function is to compare the documents. Yesterday I had to do that to find out how a particular feature is implemented. Now, when you have a generated code, this may be not so useful, but you can compare 2 Word documents and it’ll show you the differences in containing XML.